我今天去了 Anime Expo。

其实本来没这个计划的。懂我的人大概知道为什么北美最大的日本ACG展会就在家门口但我从来也不去。虽然我并不确定是否有人懂我……

然而几个星期以前老板开会的时候随口提到我又在家扮猫娘,引起了来帮忙的隔壁组很高很漂亮的 mtf 小姐姐的兴趣。她怂恿我去。

“反正你这一身感觉也挺凉快的”

“他们不会在乎好不好看”

是这样的吗?大概是?七年前去 Anime Expo 的时候见过怎样的 cosplay 我已经不太记得了。当时也是被另一个组的师兄拉去。我唯一记得的事是头一回在现实生活中听到有人说“肉便器”……

那就去吧,毕竟难得有人找我这个 forever alone 的宅居动物出去玩。

而且室友X这次也不在家。要不然他是肯定要去的。一身猫娘装跟室友X一起出门实在是有点尴尬……

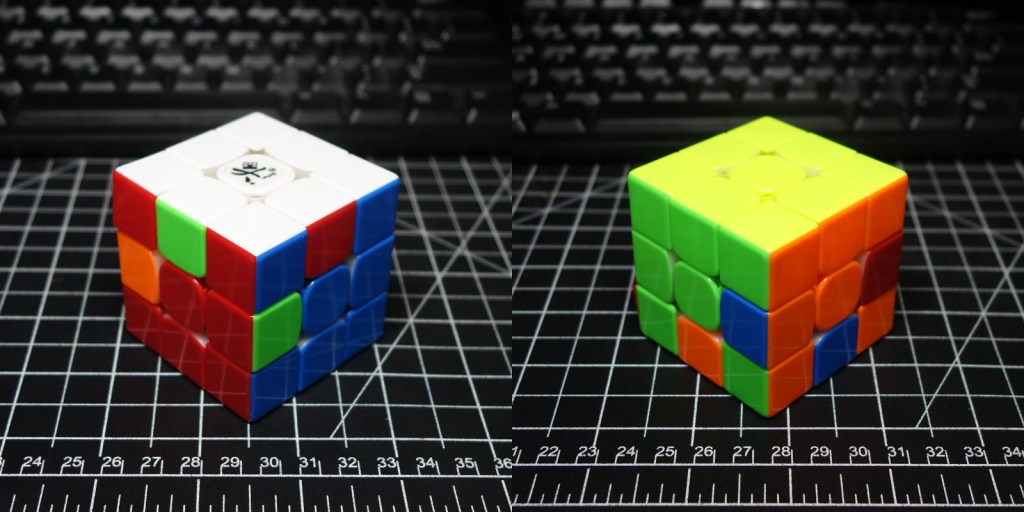

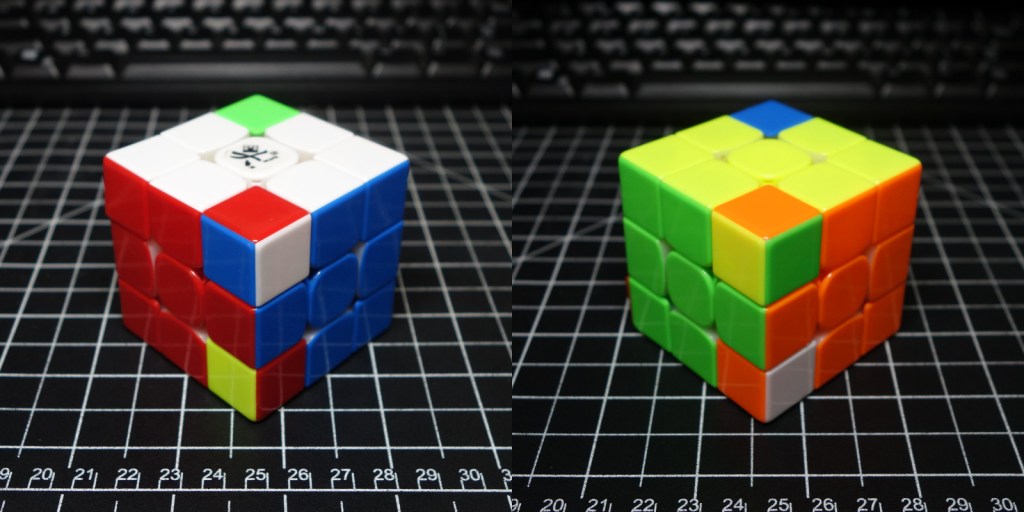

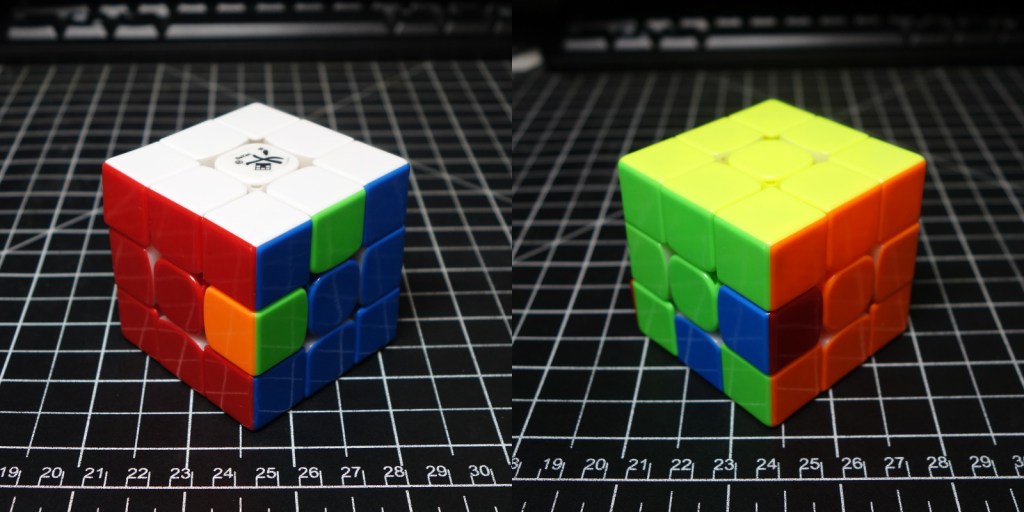

于是我就去了。只买了一天的票,因为其实也没有什么特别想关注的内容,就是随便逛逛。早起打扮好座公交去 LA Convention Center。我觉得有点玄幻。因为 SIGGRAPH 每隔一年在 LA 举办的时候会场也在这。这两件事其实满有关系,毕竟图形学可以用来做动画。但好像又很难想象把这两个东西放在一起……

还没到门口,只见有一个人举着喇叭在传教还是啥的。我路过的时候差点笑出声,because this place surely is full of sin……

我一边走一边就很 torn。因为我的大脑坚持认为寻求 sensual pleasure 是极大的错误,and that’s even without God telling me so。

这种 tension 已经持续了不知多久。十年。十五年。想当年高中的时候班里有人秀恩爱我还会说“克己复礼”,都成了班里的一个梗了。活该我喜欢的妹子被我看着不顺眼的哥们追到而我还是个处男。

然后它从高中持续到本科,又持续到博士,一直到现在。四年前的那篇回忆录里我已经做了详细的检讨,但我终于还是一点也没有变。于是乏味的生活就这样持续着。

虽然有些年头不怎么看动画了,但转了一转之后发现其实还是有不少东西能认出来。大概是因为原神和一些 VTuber 之类的铺天盖地,光看 AV 都该认识了吧……

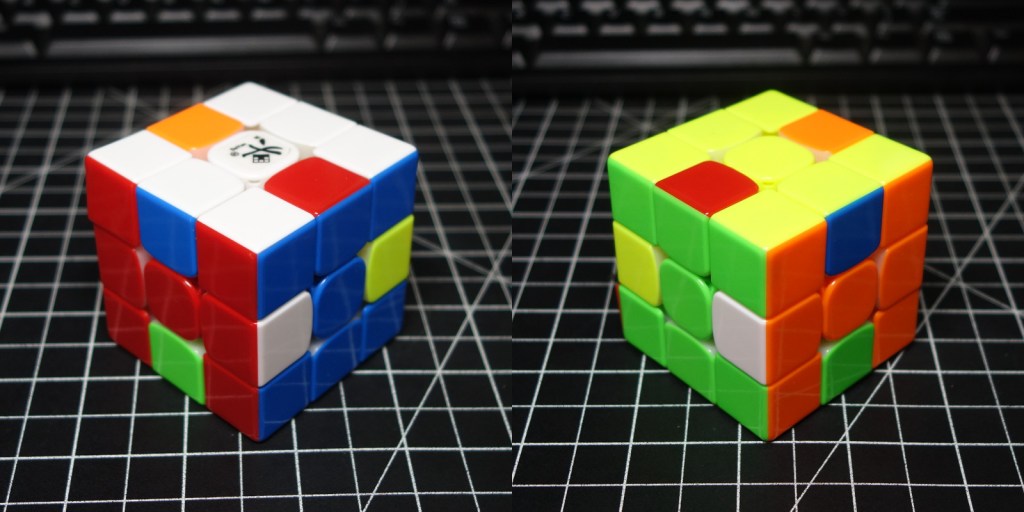

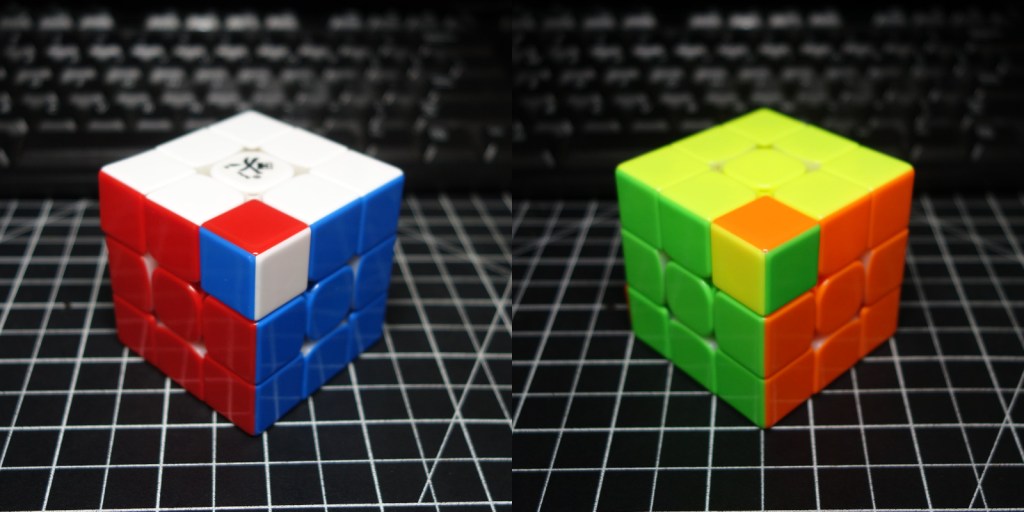

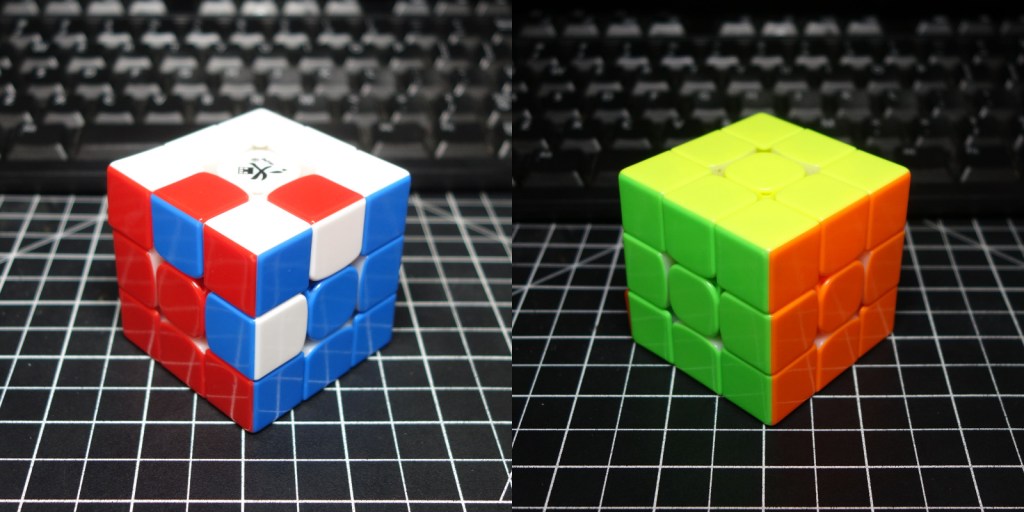

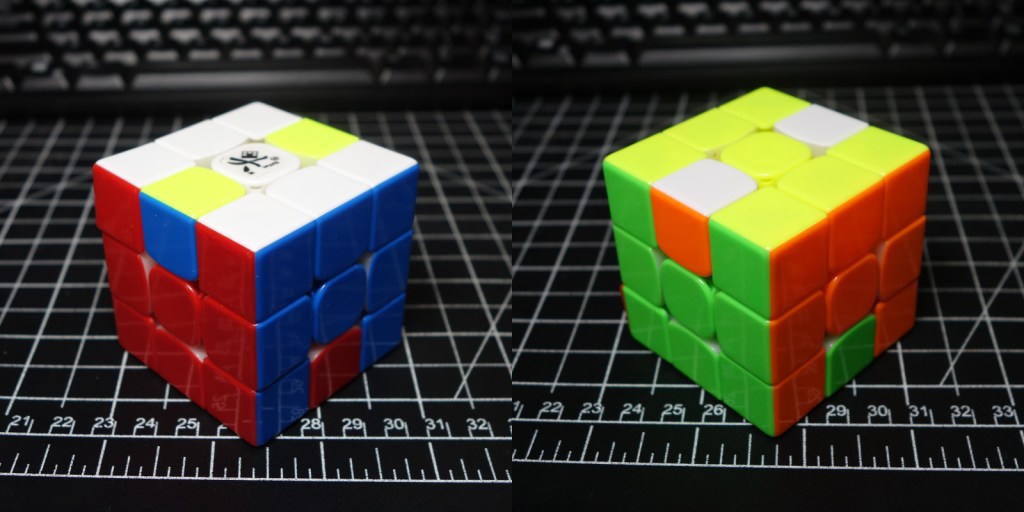

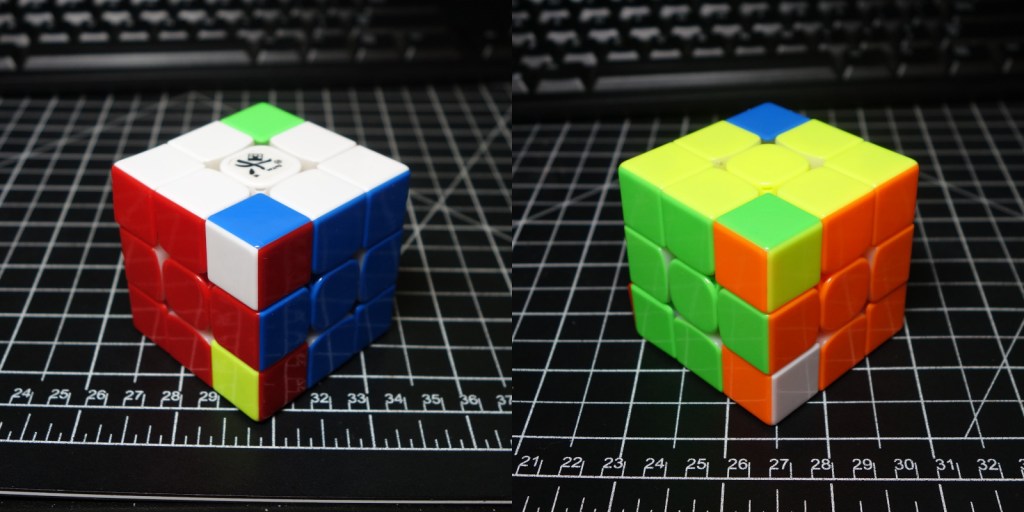

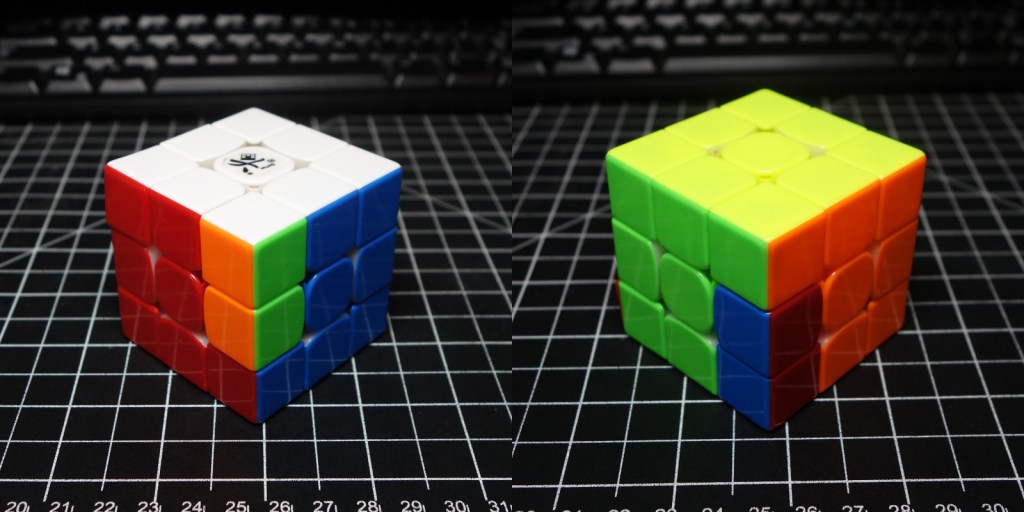

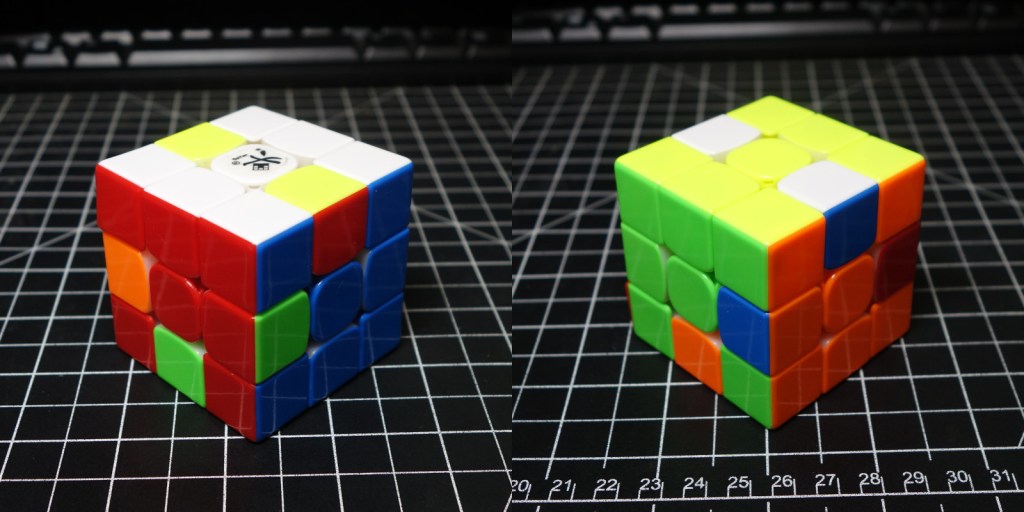

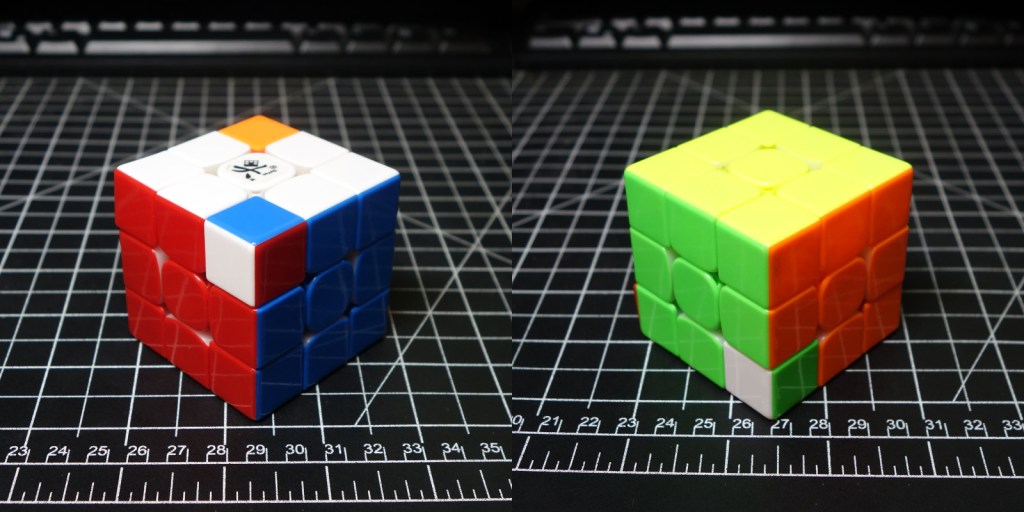

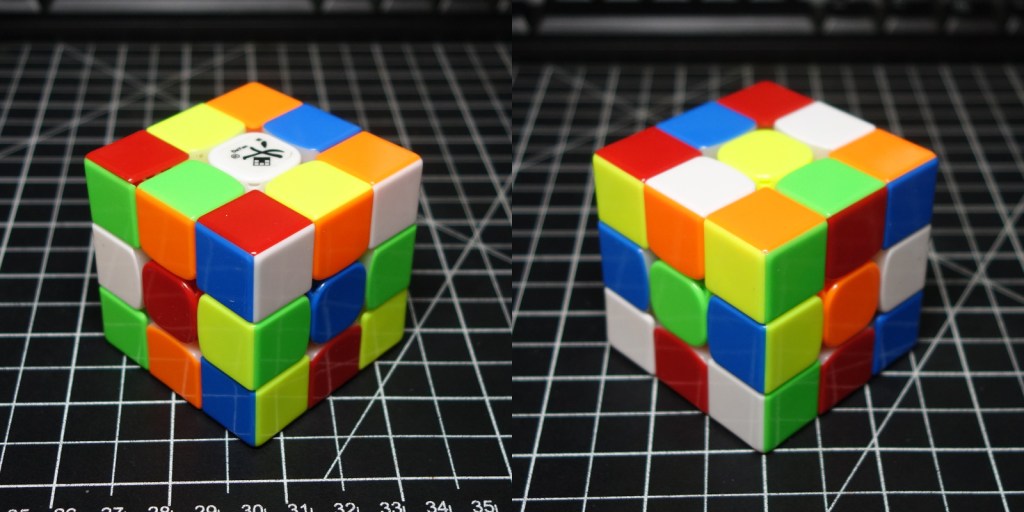

然后,我很快注意到了——确实,在这里你好不好看大家并不 judge。You do you。来 cosplay 的有中年妇女,有顶我一个半那么肥的妹子,还有不少老哥胡子都不刮,一身女仆装或者兔女郎制服,下面踩着运动鞋就来了。现场 cosplay 的男同胞不少,但认真出女装的凤毛麟角。有一部分男性角色,女性角色基本上是搞笑级的,以至于让我都产生了自己还不错的错觉,虽然我要是在国内的话可能都难看得出圈了——好歹我凑齐了一整套还化了妆啊。

有个来找我合影的店员小姐姐印证了我的判断——”Oh I like your outfit! I always told the guys to dress up like this but no one does that …”

以及,你 cosplay 什么角色,大家也不 judge。一天下来我见到的能认出来的黄油角色就只有我自己。Yet no one bats an eye。

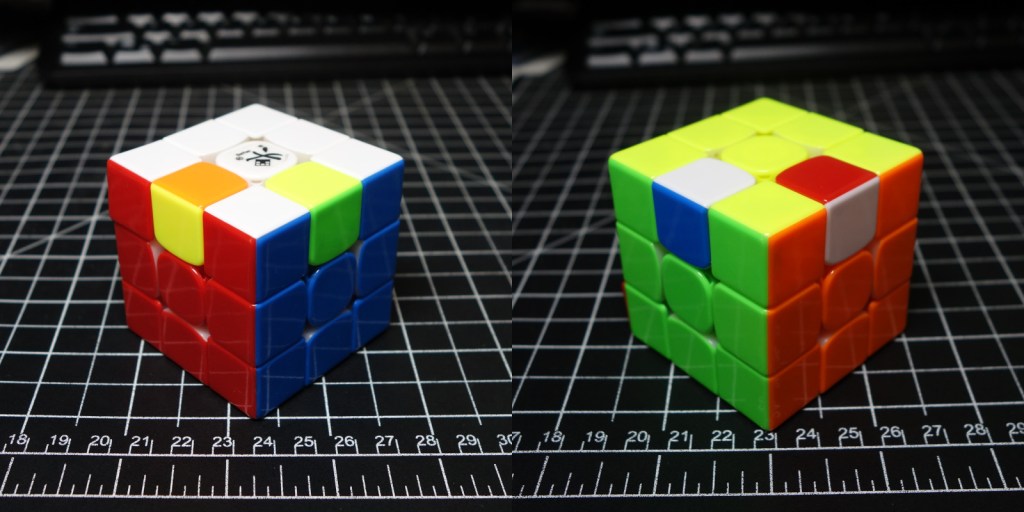

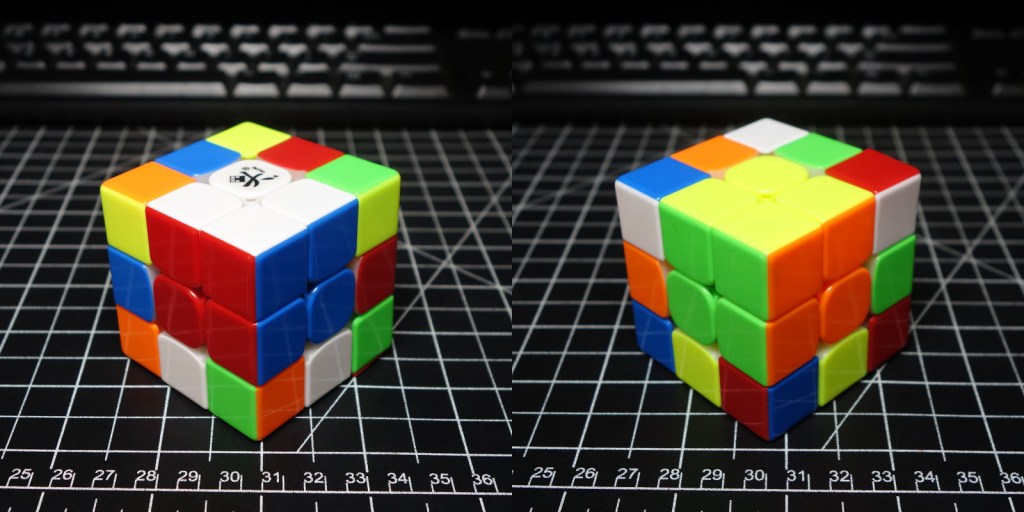

我在推上发了一张现场自拍,还有热心推友来指导,说你问题很多这样对待 cosplay 态度不认真是不对的云云。说心里话我非常同意。因为我也深信无论什么事情既然做了就要做好。虽然我自己也不咋样,但看见那些甚至都没有为做好 cosplay 做最基本的努力的哥们的时候我也很不爽。

但另一方面,我扮猫娘,没有人来 judge 我,我很快乐。

既然如此,我又为什么要 judge 别人呢?

我又回到了那件其实再简单不过的事上。“无论什么事情既然做了就要做好”——它是我进取的动力,也是我痛苦的根源。

这里也不是没有好的 cosplay。很多。但好坏不是关键,因为首先,用“好”和“坏”来作为评价标准就完全搞错了。大家来这的目的是 have fun。并不是人人都得做到放在 OnlyFans 上能卖出去的程度。不是说好和坏没有分别——无论是从感官上还是从技术上,我想什么是好的 cosplay 大概不难划定。然而当我们用“好”和“坏”去决定一个人是否可以 have fun 的时候,fun 就没有了。

如果我很丑,那么根据标准,因为我做的不好,我毫无价值,我不应该 have fun。如果我很漂亮呢?其实如果我很漂亮而你很丑,按理说你丑跟我有什么关系?但是我付出了很多努力,我做的很好,我有资格 have fun。你这么丑,你没有资格 have fun。但你居然想 have fun,你破坏了我的优越感,我很生气。于是最后我也没有 have fun。

多么不幸啊。

而如果大家并不去 judge 自己和他人,那么大家都能 have fun。很简单的道理。然而我无数次栽在这里。即便没有人在 judge 我,我无时不刻不在 judge 自己。我一定要把所有的事情都按照做得好或不好来评价,而且如果做得不好,那么就不能 have fun。最终的结果就是把 hobby 都变成了 work,我的生活中没有一丁点的 fun。But I just can’t change that. It has been drilled into me.

给大家讲个笑话。读博以后我就再也没有把 Nekopara 拿来撸管了。倒不是因为我对巧克力和香草的喜欢是柏拉图式的。只是因为我从来不觉得自己是个好 PhD。You failed your biggest goal, therefore you can’t have your biggest fun. Simple as that.

但我拒绝承担全部责任。我们的国家这么卷,肯定不是我一个人的错。我充其量只是错得有点离谱了。

凡事都要比一比。要分个好坏。要有量化标准。然后就变成了刷分。考试成绩也好,钱也好,粉丝数也好,paper 引用量也好。数字挂帅。没达到标准?Sorry, no fun for you.

不仅没有了 fun,而且好像把一切行为都变成了 a means to an end,刷分刷到最后竟然连 the end 是什么都已经忘了。

说到这里我又想起了生成 AI。生成 AI 能代替人类画师吗?

不能。

因为生成 AI 是 a means to an end,而画画是 an end。A means to an end 不能代替 an end。

因为生成 AI 是以量化方式评价的,你的 FID 又或者 clip score 多么好,blah blah blah,而画画是 have fun。量化的评价不能代替 fun。

因为艺术是一种自我表达。一个生成 AI 无论多么牛逼,它生成的东西不可能是我的自我表达。

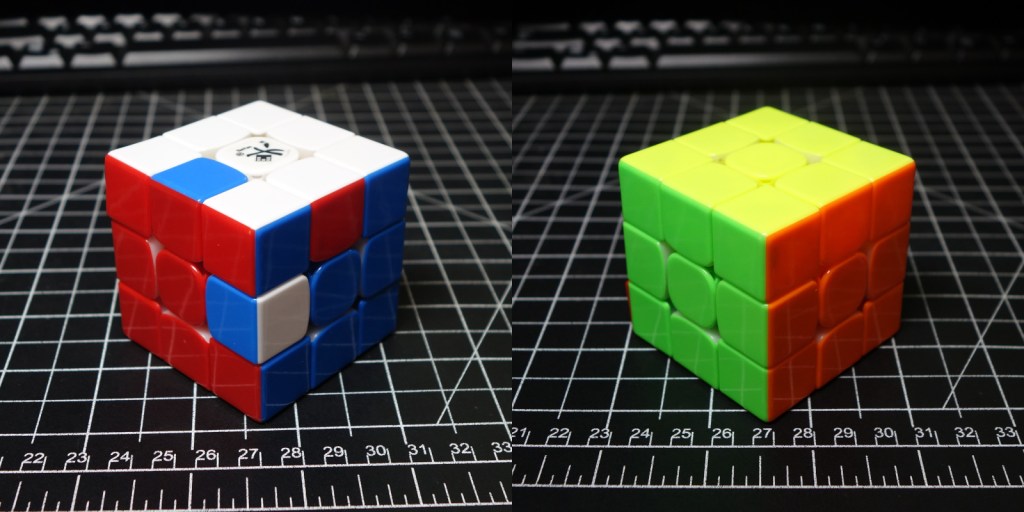

Anime Expo 上,Artist Alley 是最拥挤的展区。摊主有画得很棒的,也有技术稍差一点的。但这不重要,因为他们不是参加美术比赛来的。他们也不必担心 AI 会抢了他们的饭碗。他们是来 have fun。

这种对待 art 的态度的不同,从美术课上就很能体现出来。我小的时候学过素描。在国内学素描的都有这个经历,从石膏几何体开始,然后静物,石膏的五官模型,然后石膏头像,最后画人。按部就班。我从来就没有跨过静物这一步。

来南加大之后我又选了几门美术课。一个学期上到三分之二,就讲了基本的光影,画了两三张静物,然后直接画人。期末我给前老板画了个头像,粉彩的,得了A。

国内就是一切按标准来。考级。画得“对”或者“不对”。最后就是画得最好的人画得都一样。美国呢?不存在“画得不对”这一说。即便画得不好也是特别的。波士顿有个 Museum of Bad Art。

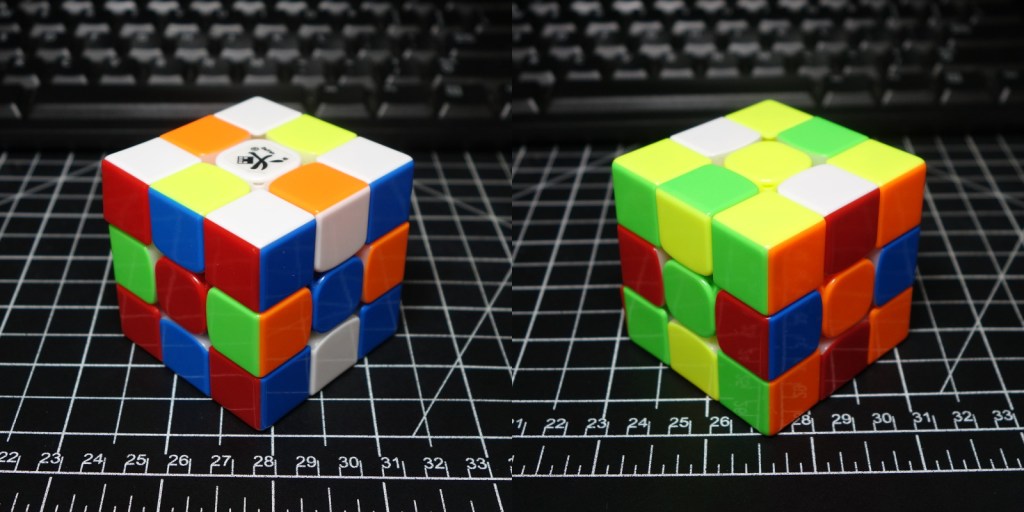

归根结底啊,其实就是不要 care。一切标准都是外部的,但 fun 是自己的。任何外部的标准,不应该阻止我 have fun。

别人做什么,我不要 care。我做什么,也不要别人 care,如果有人偏要 care,我可以不 give a fuck。只可惜国内的风气是大家普遍都很 care,那么不 give a fuck 的成本就会太高。文化决定的吧。

嘛……大概国内那个样子也不全是坏的?卷得厉害是真的,但国内出来的基础也很扎实。

但卷到头了难免遇到 existential crisis。

我又想到给我上天文学课的教授。他很有 passion。有一天上课的时候他说,”Does it matter cosmologically whether I get up and go to work today? No.”并没有人问既然如此他为什么要来上班,他也没有说。Maybe existential crisis is not a thing for him.

现在想想,正因为 in the grand scheme of things nothing matters,所以 you shouldn’t care。他很有 passion,他喜欢给他的学生讲课,这足够了。他从来没提过他是霍金的学生。It doesn’t matter and you shouldn’t care。

我也曾经不 care。初一的时候我成绩倒数。初二的时候我连作业都不写。初三分流考试我是全班第一。

自从高一得了 NOI 银牌之后,我开始 care。我从此陷进了泥潭。把青春都耗尽了,都不知道自己在干什么。

《庄子》开篇就是《逍遥游》。”carefree wandering”。很对。要达到天地境界,首先不要 care。

做研究也好,画画扮猫娘也好,不要在意那么多。I’m 30 and not getting any younger, but it’s never too late.

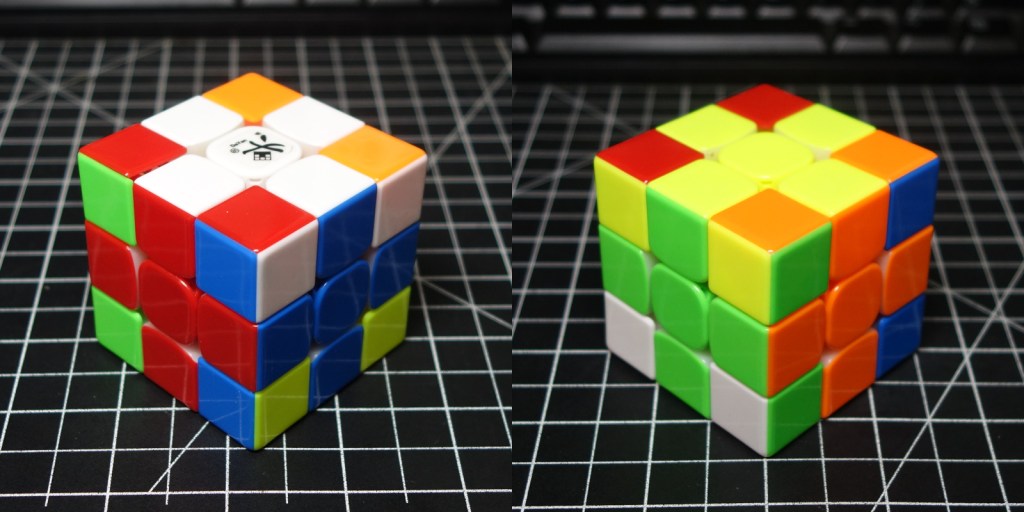

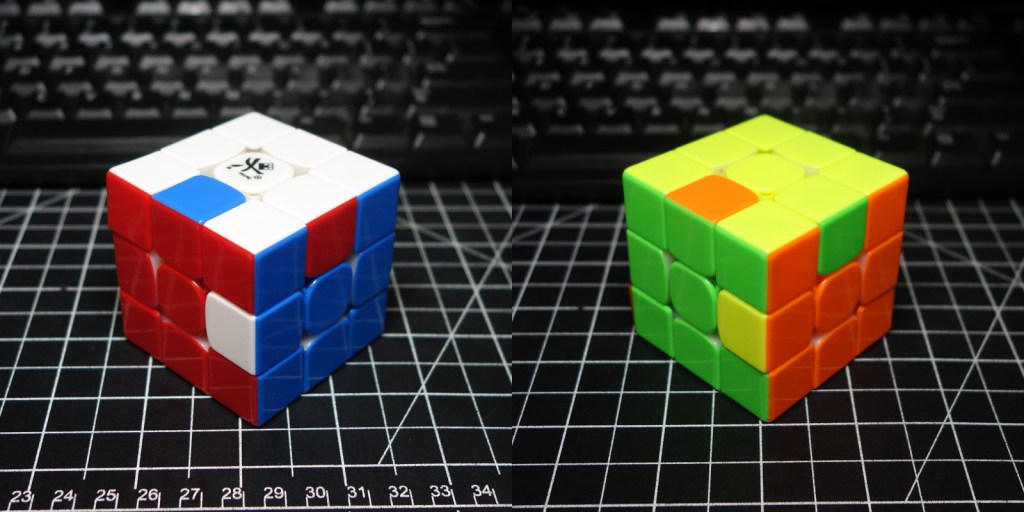

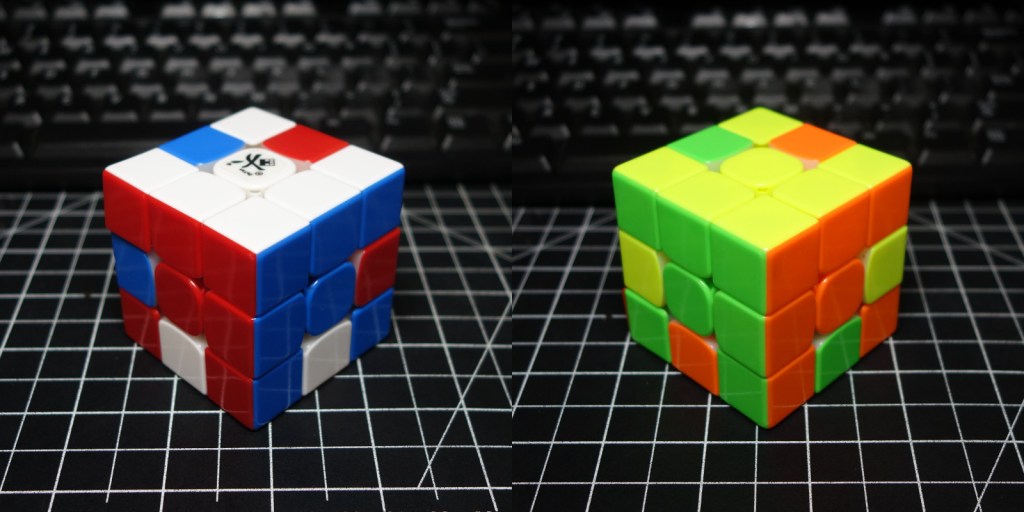

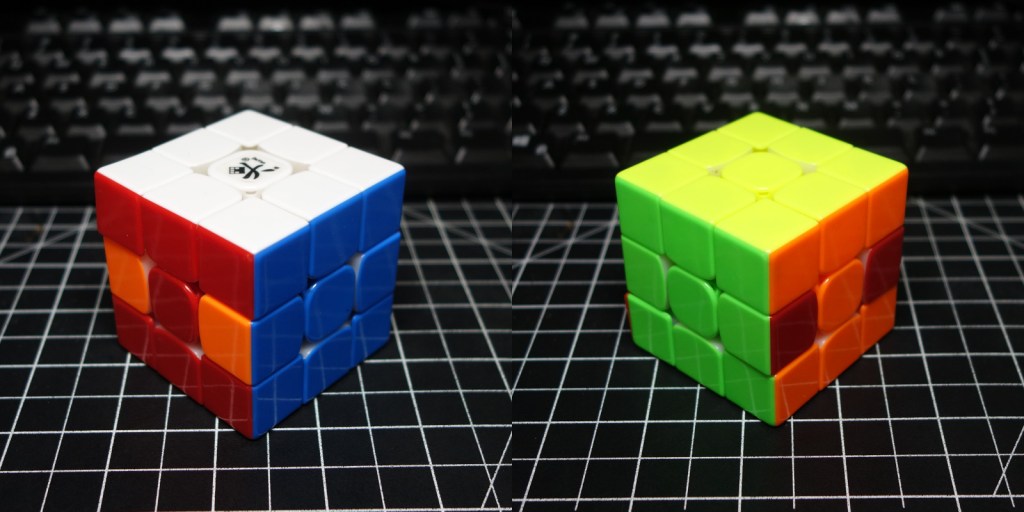

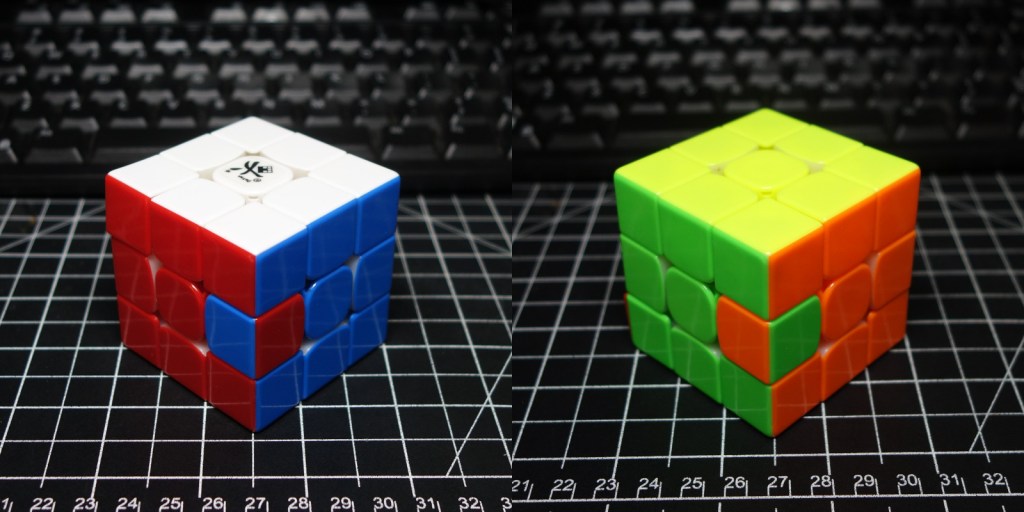

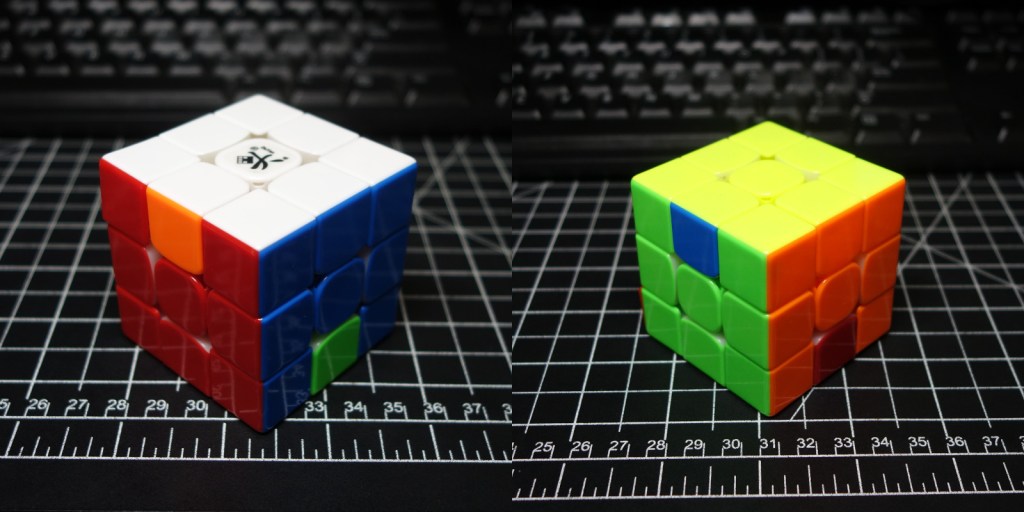

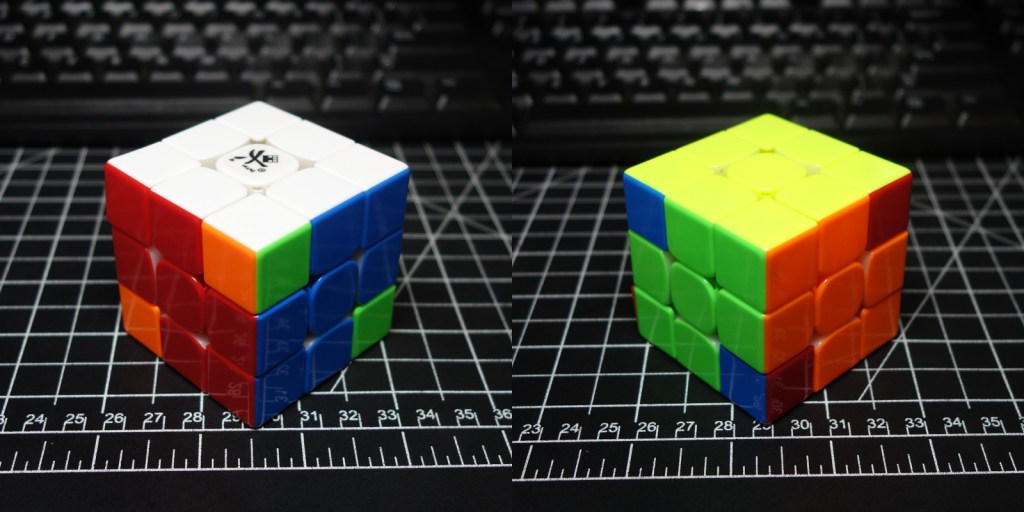

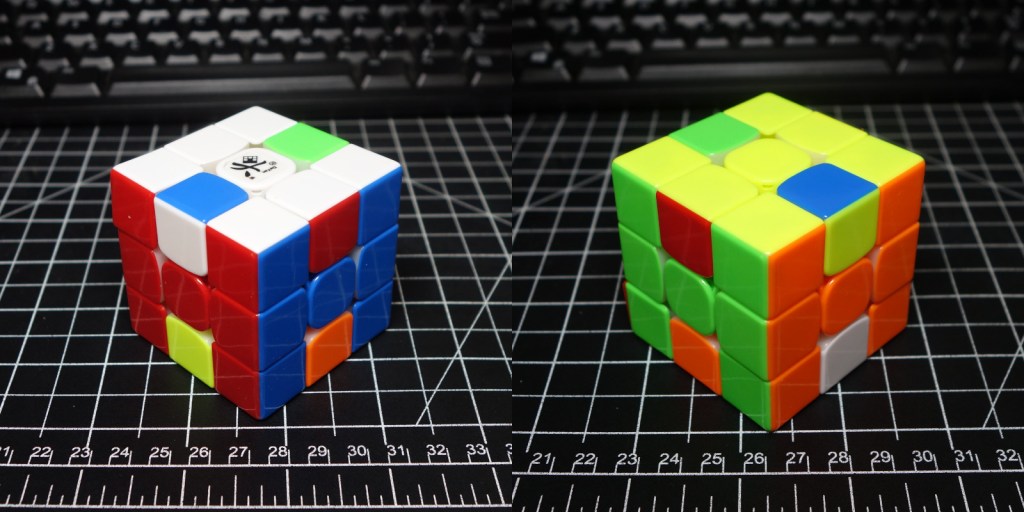

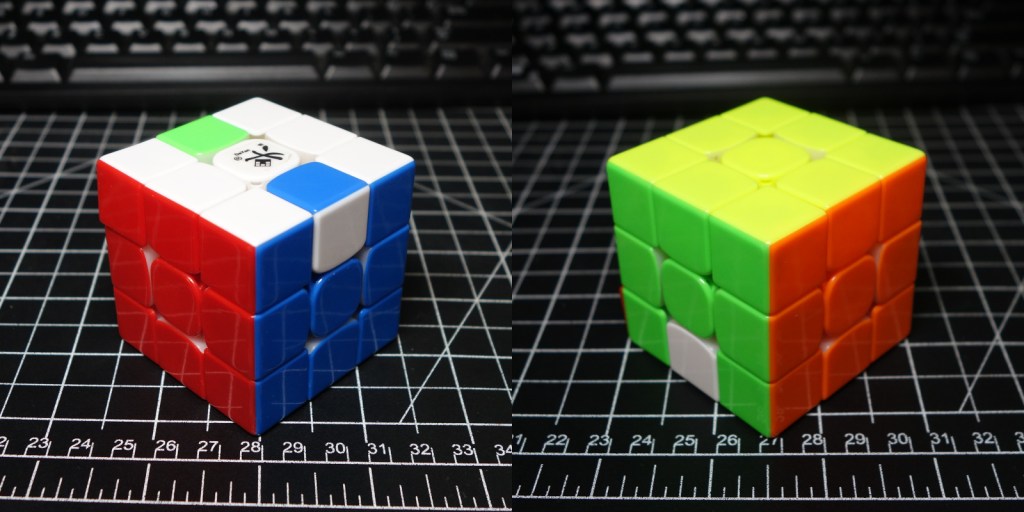

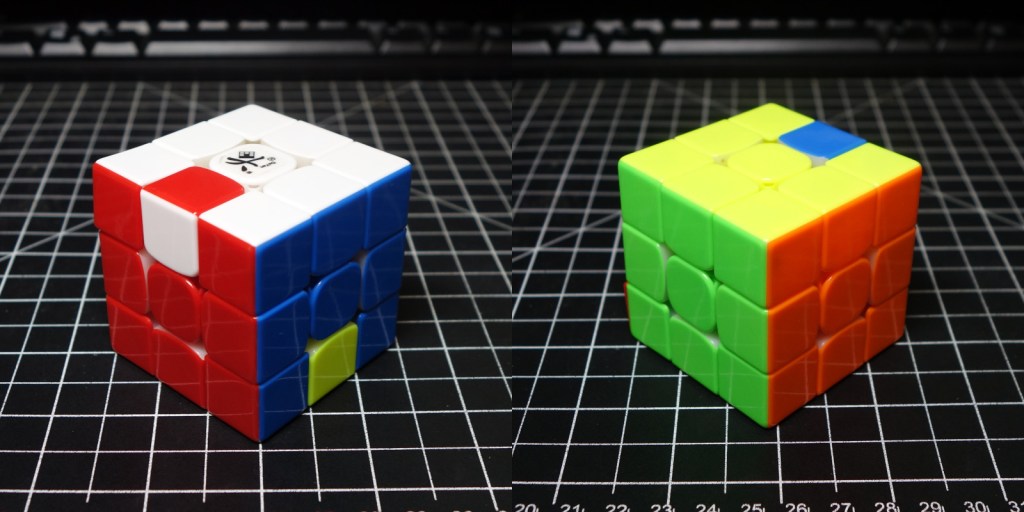

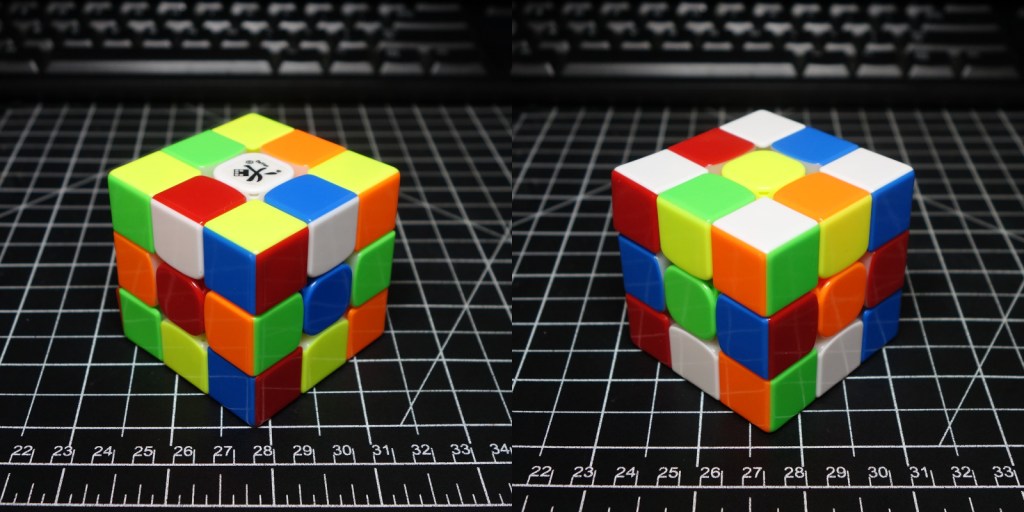

我 cosplay 巧克力去参加了 Anime Expo。

我好看吗?不怎么好看。

But I’m learning to not care, and so should you.